crawl_google_results.py update, modularize, documentation and doctest #4847


…ocumentations and doctests


Co-authored-by: Christian Clauss <cclauss@me.com>


|

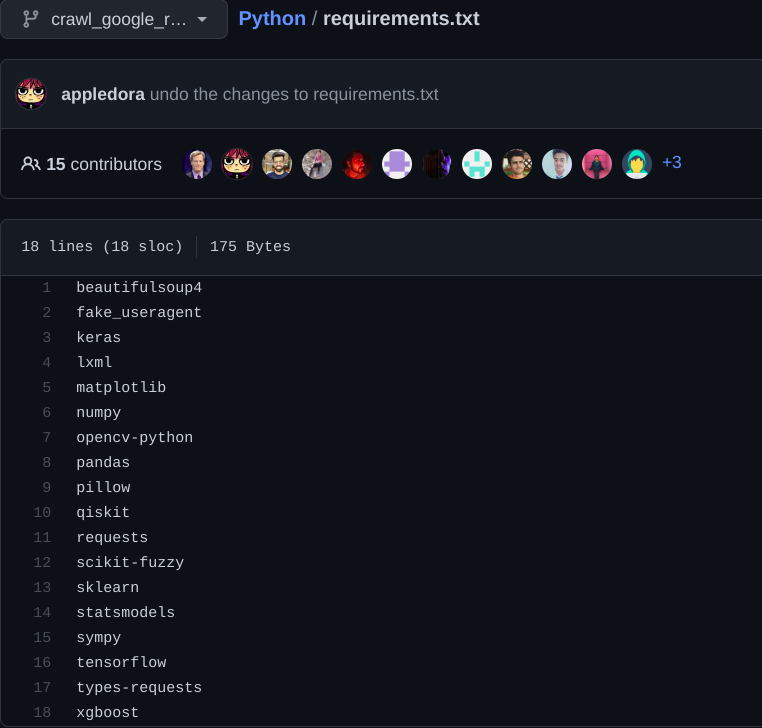

Please undo the changes to requirements.txt.

I think that this is a different algorithm than the original, so let's keep both. Please rename this file to

On it!



Automated review generated by algorithms-keeper. If there's any problem regarding this review, please open an issue about it.

algorithms-keeper commands and options

algorithms-keeper actions can be triggered by commenting on this PR:

- @algorithms-keeper review to trigger the checks for only added pull request files
- @algorithms-keeper review-all to trigger the checks for all the pull request files, including the modified files. As we cannot post review comments on lines not part of the diff, this command will post all the messages in one comment.

NOTE: Commands are in beta and so this feature is restricted only to a member or owner of the organization.


@cclauss, any comments on this one?

@poyea I think I fixed the technical and conventional errors on this one. Could you take a look?

Co-authored-by: John Law <johnlaw.po@gmail.com>

So if we add Then every single time we run the tests, it fires requests to Google. And that those

Please see https://github.com/TheAlgorithms/Python/blob/master/web_programming/instagram_crawler.py for how this is currently handled. Ideally we would mock the requests, but in this case we can factor out the processing functions further and not test the request part.
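One way to read the suggestion above is to keep the HTTP call in a thin wrapper and doctest only the parsing step, which needs no network access. The following is a minimal sketch under stated assumptions: the names `extract_links` and `_LinkCollector` are hypothetical, and the standard library's `html.parser` stands in for whatever parser the PR actually uses.

```python
from __future__ import annotations

from html.parser import HTMLParser


class _LinkCollector(HTMLParser):
    """Collect the href of every <a> tag in the HTML that is fed in."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag: str, attrs: list[tuple[str, str | None]]) -> None:
        if tag == "a":
            for name, value in attrs:
                # Skip anchors that have no usable href value.
                if name == "href" and value:
                    self.links.append(value)


def extract_links(html: str) -> list[str]:
    """Pure processing step: takes already-fetched HTML, makes no request.

    >>> extract_links('<a href="/next">2</a><a>no href</a>')
    ['/next']
    """
    collector = _LinkCollector()
    collector.feed(html)
    return collector.links
```

With this split, the doctest runs against a fixed HTML string, so running the test suite does not fire any request to Google. The request wrapper itself would stay untested or be covered separately with a mock.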

changing constant name

Co-authored-by: John Law <johnlaw.po@gmail.com>

@poyea, for the sake of clarification, are you suggesting I follow the coding pattern in https://github.com/TheAlgorithms/Python/blob/master/web_programming/instagram_crawler.py?

Not necessarily. Now it's just a matter of how we write our test suite.

@poyea, forgive me, but I believe I am still a little confused about the testing requirements. :|

web_programming/get_google_search_results.py:30: error: Name "headers" is not defined

@appledora You may try to run the test locally

This pull request has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

This comment was marked as off-topic.


def parse_results(query: str = "") -> list:

Suggested change:

- def parse_results(query: str = "") -> list:
+ def parse_results(query: str = "") -> list[dict[str, str | None]]:

Annotate the list elements' type.

next_page = []
for item in table_data:
    next_page.append(item["href"])

Suggested change:

- next_page = []
- for item in table_data:
-     next_page.append(item["href"])
+ next_page = (item["href"] for item in table_data)
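Note that the parenthesized form in this suggestion builds a generator rather than a list, so it is lazy and can be consumed only once. If later code indexes `next_page` or iterates over it more than once, a list comprehension keeps the original list behavior in one line. A small sketch with made-up sample data (the `table_data` values here are illustrative only):

```python
# Illustrative stand-in for the parsed anchor tags; the values are made up.
table_data = [{"href": "/search?start=10"}, {"href": "/search?start=20"}]

# Generator expression, as in the suggestion: lazy and single-use.
next_page_gen = (item["href"] for item in table_data)
first_pass = list(next_page_gen)
second_pass = list(next_page_gen)  # the generator is already exhausted here

# List comprehension: equally short, but still a real list that can be
# indexed and iterated repeatedly, like the original append loop produced.
next_page_list = [item["href"] for item in table_data]
```

Which form fits depends on how the rest of the function uses `next_page`, which is not visible in this hunk.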

new_link = BASE_URL + next_page_link
try:
    response = requests.get(new_link, headers=HEADERS)

if filename == "":
    filename = query + "-query.txt"

Suggested change:

- if filename == "":
-     filename = query + "-query.txt"
+ if not filename:
+     filename = query + "-query.txt"

if filename == "":
    filename = query + "-query.txt"
elif not filename.endswith(".txt"):
    filename = filename + ".txt"

Suggested change:

-     filename = filename + ".txt"
+     filename += ".txt"

if query == "":
    query = "potato"

Suggested change:

- if query == "":
-     query = "potato"
+ if not query:
+     query = "potato"

>>> write_google_search_results("python", "test") != None
True
>>> write_google_search_results("", "tet.html") != None
True
>>> write_google_search_results("python", "") != None
True
>>> write_google_search_results("", "") != None
True
>>> "test" in write_google_search_results("python", "test")
True
>>> "test1" in write_google_search_results("", "test1")
True
>>> "potato" in write_google_search_results("", "")
True

Make the doctests more strict by testing the outputs for equality:

Suggested change:

- >>> write_google_search_results("python", "test") != None
- True
- >>> write_google_search_results("", "tet.html") != None
- True
- >>> write_google_search_results("python", "") != None
- True
- >>> write_google_search_results("", "") != None
- True
- >>> "test" in write_google_search_results("python", "test")
- True
- >>> "test1" in write_google_search_results("", "test1")
- True
- >>> "potato" in write_google_search_results("", "")
- True
+ >>> write_google_search_results("python", "test") == "test.txt"
+ True
+ >>> write_google_search_results("", "tet.html") == "tet.html.txt"
+ True
+ >>> write_google_search_results("python", "") == "python.txt"
+ True
+ >>> write_google_search_results("", "test1") == "test1.txt"
+ True
+ >>> write_google_search_results("", "") == "potato.txt"
+ True
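The equality checks can also be run without touching the network if the file-naming logic is pulled into its own function. The sketch below assumes that `write_google_search_results` returns the output file name (the doctests imply this) and reuses the naming rules quoted earlier in this review; the helper name `resolve_output_filename` is hypothetical. With those rules, an empty `filename` yields `<query>-query.txt`, so the expected values `"python.txt"` and `"potato.txt"` in the suggestion would need adjusting unless the naming rule itself changes.

```python
def resolve_output_filename(query: str = "", filename: str = "") -> str:
    """Return the name of the file the results would be written to.

    Hypothetical helper: mirrors the naming rules shown in the reviewed diff.

    >>> resolve_output_filename("python", "test")
    'test.txt'
    >>> resolve_output_filename("", "tet.html")
    'tet.html.txt'
    >>> resolve_output_filename("python", "")
    'python-query.txt'
    >>> resolve_output_filename("", "test1")
    'test1.txt'
    >>> resolve_output_filename("", "")
    'potato-query.txt'
    """
    if not query:
        query = "potato"  # default query used in the reviewed code
    if not filename:
        filename = f"{query}-query.txt"
    elif not filename.endswith(".txt"):
        filename += ".txt"
    return filename
```

Because the helper is pure, its doctests compare exact strings and do not depend on Google's response.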


Closing this PR because the code no longer works. I tried running this file locally and it wasn't able to get any Google results. I believe this is because the CSS identifiers have changed, so this file is no longer able to find the results in the HTTP response.

Added large changes to web_programming/crawl_google_results.py with documentation and doctests. Modularized the script and made it more customizable. Fixed formatting using black and flake8.
